Streaming ESP32-CAM video to the internet

Limitations of the existing approach

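The example code that ships with Arduino (the CameraWebServer example) turns the ESP32-CAM itself into a web server. A client that connects to that server receives the live video stream. The drawback is significant: the stream is only reachable from the local network, so it cannot be watched from the internet.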

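This article describes a way to watch the stream of an ESP32-CAM on a local network from the internet. The prerequisite is a cloud server that is reachable from the internet. The ESP32-CAM pushes its frames to that server over a WebSocket, and viewers connect to the server instead of to the camera.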

Arduino side

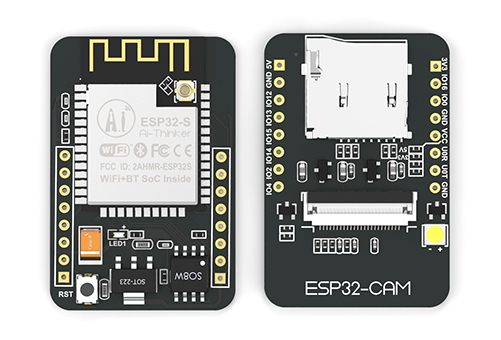

The board used is the one pictured above. When uploading from the Arduino IDE, select ESP32 Wrover Module as the board.

To compile the example code below, you also need to install the ArduinoWebsockets library through the Arduino IDE's Library Manager.

Wiring

USB-TTL 3V3 to ESP32-CAM 3V3

USB-TTL GND to ESP32-CAM GND

USB-TTL RXD to ESP32-CAM U0T (TX)

USB-TTL TXD to ESP32-CAM U0R (RX)

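To upload a program, connect the ESP32-CAM's GPIO0 to GND. After the upload finishes, disconnect GPIO0 from GND and reset the board so that it runs the new program.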

Initializing WiFi

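First, initialize the ESP32-CAM's WiFi. This uses WiFiMulti, which accepts several WiFi names (SSIDs) and passwords at once and lets the ESP32 decide which network to join. This is useful when the ESP32 is carried between different networks. If it always stays at home or in another fixed location, a simpler single-network setup is enough.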

```cpp
#include <WiFi.h>
#include <WiFiMulti.h>

// Table of WiFi names (SSIDs) and passwords to try
typedef struct {
  const char *name;
  const char *pwd;
} WiFiItem_t;

WiFiItem_t WiFiTable[] = {
  { "WiFiName1", "" },          // empty password: open network
  { "WiFiName2", "12345678" },
  { "WiFiName3", "87654321" }
};

// Number of entries in the table
const unsigned int WiFiCnt = sizeof(WiFiTable) / sizeof(WiFiItem_t);

// Global WiFiMulti instance
WiFiMulti wifiMulti;

// Register every network with WiFiMulti. The actual connection
// happens later, when loop() calls wifiMulti.run().
void setup_wifi() {
  // 3-second startup delay with a countdown on the serial port
  for (uint8_t t = 3; t > 0; t--) {
    Serial.printf("[SETUP] WAIT %d...\n", t);
    Serial.flush();
    delay(1000);
  }

  for (uint8_t i = 0; i < WiFiCnt; i++) {
    Serial.printf("Add AP %s %s\n", WiFiTable[i].name, WiFiTable[i].pwd);
    wifiMulti.addAP(WiFiTable[i].name, WiFiTable[i].pwd);
  }
}
```
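WiFiMulti is generally understood to work like this: it scans, keeps only the networks listed in its table, and joins the one with the strongest signal (RSSI). The Go sketch below models only that selection step, for illustration. The names `scanResult` and `pickAP` are hypothetical. The real arduino-esp32 implementation also handles scanning, timeouts and reconnection.

```go
package main

// scanResult is one network seen in a WiFi scan (hypothetical type).
type scanResult struct {
	SSID string
	RSSI int // dBm; closer to 0 means a stronger signal
}

// pickAP returns the configured network (SSID -> password) that was seen
// with the strongest signal. ok is false when no configured network is
// in range.
func pickAP(known map[string]string, scan []scanResult) (ssid, pwd string, ok bool) {
	best := 0
	for _, s := range scan {
		p, isKnown := known[s.SSID]
		if isKnown && (!ok || s.RSSI > best) {
			ssid, pwd, best, ok = s.SSID, p, s.RSSI, true
		}
	}
	return
}
```

Networks that are not in the table are ignored, even when their signal is stronger. This is why adding every place the board may visit to `WiFiTable` is sufficient.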

Capturing camera frames

```cpp
#include "esp_camera.h"

// Camera pin map of the AI-Thinker ESP32-CAM
// (same as CAMERA_MODEL_AI_THINKER in the CameraWebServer example)
#define PWDN_GPIO_NUM 32
#define RESET_GPIO_NUM -1   // reset pin not connected
#define XCLK_GPIO_NUM 0
#define SIOD_GPIO_NUM 26
#define SIOC_GPIO_NUM 27
#define Y9_GPIO_NUM 35
#define Y8_GPIO_NUM 34
#define Y7_GPIO_NUM 39
#define Y6_GPIO_NUM 36
#define Y5_GPIO_NUM 21
#define Y4_GPIO_NUM 19
#define Y3_GPIO_NUM 18
#define Y2_GPIO_NUM 5
#define VSYNC_GPIO_NUM 25
#define HREF_GPIO_NUM 23
#define PCLK_GPIO_NUM 22

// Initialize the camera
void setup_camera() {
  camera_config_t config = {};  // zero-initialize the fields not set below
  config.ledc_channel = LEDC_CHANNEL_0;
  config.ledc_timer = LEDC_TIMER_0;
  config.pin_d0 = Y2_GPIO_NUM;
  config.pin_d1 = Y3_GPIO_NUM;
  config.pin_d2 = Y4_GPIO_NUM;
  config.pin_d3 = Y5_GPIO_NUM;
  config.pin_d4 = Y6_GPIO_NUM;
  config.pin_d5 = Y7_GPIO_NUM;
  config.pin_d6 = Y8_GPIO_NUM;
  config.pin_d7 = Y9_GPIO_NUM;
  config.pin_xclk = XCLK_GPIO_NUM;
  config.pin_pclk = PCLK_GPIO_NUM;
  config.pin_vsync = VSYNC_GPIO_NUM;
  config.pin_href = HREF_GPIO_NUM;
  config.pin_sscb_sda = SIOD_GPIO_NUM;
  config.pin_sscb_scl = SIOC_GPIO_NUM;
  config.pin_pwdn = PWDN_GPIO_NUM;
  config.pin_reset = RESET_GPIO_NUM;
  config.xclk_freq_hz = 20000000;        // 20 MHz XCLK
  config.pixel_format = PIXFORMAT_JPEG;  // capture frames as JPEG
  config.frame_size = FRAMESIZE_QVGA;    // 320x240, see the list below
  config.jpeg_quality = 10;              // 0-63, lower means better quality
  config.fb_count = 1;                   // one frame buffer

  esp_err_t err = esp_camera_init(&config);
  if (err != ESP_OK) {
    Serial.printf("Camera init failed with error 0x%x\n", err);
    return;
  }
}
```

The possible frame_size values are defined as follows (from the esp32-camera `framesize_t` enum):

```c
FRAMESIZE_96X96,    // 96x96
FRAMESIZE_QQVGA,    // 160x120
FRAMESIZE_QCIF,     // 176x144
FRAMESIZE_HQVGA,    // 240x176
FRAMESIZE_240X240,  // 240x240
FRAMESIZE_QVGA,     // 320x240
FRAMESIZE_CIF,      // 400x296
FRAMESIZE_HVGA,     // 480x320
FRAMESIZE_VGA,      // 640x480
FRAMESIZE_SVGA,     // 800x600
FRAMESIZE_XGA,      // 1024x768
FRAMESIZE_HD,       // 1280x720
FRAMESIZE_SXGA,     // 1280x1024
FRAMESIZE_UXGA,     // 1600x1200
// 3MP Sensors
FRAMESIZE_FHD,      // 1920x1080
FRAMESIZE_P_HD,     // 720x1280
FRAMESIZE_P_3MP,    // 864x1536
FRAMESIZE_QXGA,     // 2048x1536
// 5MP Sensors
FRAMESIZE_QHD,      // 2560x1440
FRAMESIZE_WQXGA,    // 2560x1600
FRAMESIZE_P_FHD,    // 1080x1920
FRAMESIZE_QSXGA,    // 2560x1920
```
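When choosing a frame_size, it can help to treat the list as data. The Go sketch below encodes the entries above the "3MP Sensors" heading and returns the one with the most pixels that still fits a target display. The names `frameSize`, `baseSizes` and `largestFrameSize`, and the "most pixels that fits" rule, are illustrative assumptions. Keep in mind that a larger frame also means a larger JPEG to upload for every frame.

```go
package main

// frameSize mirrors one entry of the framesize_t list above.
type frameSize struct {
	Name          string
	Width, Height int
}

// baseSizes holds the entries listed before the 3MP/5MP headings, in enum order.
var baseSizes = []frameSize{
	{"FRAMESIZE_96X96", 96, 96},
	{"FRAMESIZE_QQVGA", 160, 120},
	{"FRAMESIZE_QCIF", 176, 144},
	{"FRAMESIZE_HQVGA", 240, 176},
	{"FRAMESIZE_240X240", 240, 240},
	{"FRAMESIZE_QVGA", 320, 240},
	{"FRAMESIZE_CIF", 400, 296},
	{"FRAMESIZE_HVGA", 480, 320},
	{"FRAMESIZE_VGA", 640, 480},
	{"FRAMESIZE_SVGA", 800, 600},
	{"FRAMESIZE_XGA", 1024, 768},
	{"FRAMESIZE_HD", 1280, 720},
	{"FRAMESIZE_SXGA", 1280, 1024},
	{"FRAMESIZE_UXGA", 1600, 1200},
}

// largestFrameSize returns the entry with the most pixels that fits within
// maxW x maxH. The bool is false if no entry fits.
func largestFrameSize(maxW, maxH int) (frameSize, bool) {
	var best frameSize
	found := false
	for _, s := range baseSizes {
		fits := s.Width <= maxW && s.Height <= maxH
		if fits && (!found || s.Width*s.Height > best.Width*best.Height) {
			best, found = s, true
		}
	}
	return best, found
}
```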

Adding WebSocket support

```cpp
#include <ArduinoWebsockets.h>

using namespace websockets;

// WebSocket client used to push frames to the server
WebsocketsClient client;
```

Set the address of your own cloud web server:

```cpp
const char* websockets_server_host = "xxxx.com";  // replace with your server's domain or IP
const uint16_t websockets_server_port = 80;        // must match the port the server listens on
```

Putting it all together

```cpp
void setup() {
  Serial.begin(115200);
  setup_camera();
  setup_wifi();
}

void loop() {
  // Wait until WiFi is connected
  uint8_t wifiState = wifiMulti.run();
  if (wifiState != WL_CONNECTED) {
    Serial.printf("[WiFi] failed to connect, ret %d\n", wifiState);
    delay(1000);
    return;
  }

  if (client.available()) {
    // Capture a video frame and send it to the server here
    // capture_video();
    client.poll();  // process incoming WebSocket data (messages, pings)
  } else {
    // The WebSocket client is not connected: (re)connect to the server
    Serial.println("Connected to Wifi, Connecting to server.");
    bool connected = client.connect(websockets_server_host, websockets_server_port, "/video");
    if (connected) {
      Serial.println("Connected!");
    } else {
      Serial.println("Not Connected!");
      delay(1000);  // wait before retrying while the server is unreachable
    }

    // Callback for messages from the server (currently it only logs them)
    client.onMessage([&](WebsocketsMessage message) {
      Serial.print("Got Message: ");
      Serial.println(message.data());
    });
  }
}
```

Once these pieces are combined, one important function is still missing: capturing camera data and sending it to the server through the WebSocket client.

Capturing and sending camera frames

```cpp
// Grab one JPEG frame from the camera and send it as a binary WebSocket message
void capture_video() {
  camera_fb_t *fb = esp_camera_fb_get();
  if (fb == NULL) {
    Serial.println(F("Camera capture failed"));
    return;
  }
  client.sendBinary((const char *)fb->buf, fb->len);
  esp_camera_fb_return(fb);  // hand the buffer back to the driver for reuse
}
```

After adding this function, make one small change to loop(): uncomment the `capture_video();` line.
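Each call to capture_video sends one JPEG as a single binary WebSocket message. To see what that adds on the wire, the Go sketch below builds the RFC 6455 header for an unfragmented binary message. It assumes the library sends each message as a single frame. `binaryFrameHeader` and `maskPayload` are hypothetical helpers, not ArduinoWebsockets code. A client such as the ESP32 must mask its frames. A server must not mask the frames it sends.

```go
package main

// binaryFrameHeader returns the RFC 6455 header for one unfragmented binary
// message (FIN=1, opcode=0x2) carrying n payload bytes. If maskKey is
// non-nil, the MASK bit is set and the 4-byte key is appended.
func binaryFrameHeader(n uint64, maskKey *[4]byte) []byte {
	h := []byte{0x80 | 0x2} // FIN bit + binary opcode
	var maskBit byte
	if maskKey != nil {
		maskBit = 0x80
	}
	switch {
	case n < 126: // length fits in 7 bits
		h = append(h, maskBit|byte(n))
	case n <= 0xFFFF: // 126 + 16-bit big-endian length
		h = append(h, maskBit|126, byte(n>>8), byte(n))
	default: // 127 + 64-bit big-endian length
		h = append(h, maskBit|127)
		for shift := 56; shift >= 0; shift -= 8 {
			h = append(h, byte(n>>uint(shift)))
		}
	}
	if maskKey != nil {
		h = append(h, maskKey[:]...)
	}
	return h
}

// maskPayload XORs byte i with key[i%4] in place; applying it twice
// restores the original payload.
func maskPayload(p []byte, key [4]byte) {
	for i := range p {
		p[i] ^= key[i%4]
	}
}
```

A frame between 126 and 65535 bytes therefore carries a 4-byte header when sent unmasked, or 8 bytes when masked by the client.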

Server side

With the ESP32 logic in place, the next step is a web server that lets viewers watch the live stream from the internet.

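The server code is short. The ESP32 pushes JPEG frames to /video over a WebSocket. A browser that opens /watch receives those frames as an MJPEG (multipart/x-mixed-replace) stream.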

```go
package main

import (
	"fmt"
	"log"
	"net/http"

	"github.com/gorilla/websocket"
)

var upgrader = websocket.Upgrader{
	ReadBufferSize:  10240,
	WriteBufferSize: 1024,
	// Accept connections from any origin (avoids cross-origin rejections)
	CheckOrigin: func(r *http.Request) bool {
		return true
	},
}

// frameChan carries JPEG frames from the ESP32 (/video) to viewers.
// Each frame is received by only one viewer.
var frameChan = make(chan []byte, 16)

const (
	partBOUNDARY      = "123456789000000000000987654321"
	streamContentType = "multipart/x-mixed-replace;boundary=" + partBOUNDARY
	streamBoundary    = "\r\n--" + partBOUNDARY + "\r\n"
	streamPart        = "Content-Type: image/jpeg\r\nContent-Length: %d\r\n\r\n"
)

// watchHandler serves the frames to a browser as an MJPEG stream.
func watchHandler(w http.ResponseWriter, r *http.Request) {
	flusher, ok := w.(http.Flusher)
	if !ok {
		http.Error(w, "streaming not supported", http.StatusInternalServerError)
		return
	}
	w.Header().Set("Content-Type", streamContentType)
	w.Header().Set("Access-Control-Allow-Origin", "*")
	for {
		var data []byte
		select {
		case data = <-frameChan:
		case <-r.Context().Done():
			return // viewer disconnected
		}
		fmt.Fprint(w, streamBoundary)
		fmt.Fprintf(w, streamPart, len(data)) // size of this JPEG in bytes
		if _, err := w.Write(data); err != nil {
			return
		}
		flusher.Flush()
	}
}

// videoHandler receives the frames pushed by the ESP32 over a WebSocket.
func videoHandler(w http.ResponseWriter, r *http.Request) {
	log.Printf("video connection from %s", r.RemoteAddr)
	ws, err := upgrader.Upgrade(w, r, nil)
	if err != nil {
		log.Printf("err: %v", err)
		return
	}
	defer ws.Close()
	for {
		t, p, err := ws.ReadMessage()
		if err != nil {
			log.Printf("read error: %v", err)
			return
		}
		log.Printf("type %v data len %v", t, len(p))
		// Non-blocking send: drop the frame if the buffer is full
		select {
		case frameChan <- p:
		default:
			log.Printf("drop frame")
		}
	}
}

func main() {
	defer fmt.Println("Program Exited...")
	m := http.NewServeMux()
	m.HandleFunc("/watch", watchHandler)
	m.HandleFunc("/video", videoHandler)
	server := http.Server{
		Addr:    ":80", // ports below 1024 usually require root privileges
		Handler: m,
	}
	if err := server.ListenAndServe(); err != nil {
		log.Println(err)
	}
}
```
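The /watch response follows the multipart/x-mixed-replace format. Each part consists of a boundary line, its own headers, a blank line and the JPEG bytes. The browser replaces the displayed image with every new part. The Go sketch below builds a single part in isolation, so the format can be checked without a camera. `mjpegPart` and `writeMJPEGPart` are hypothetical helpers that reuse the boundary string from the server code.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
)

const partBoundary = "123456789000000000000987654321"

// mjpegPart returns one part of a multipart/x-mixed-replace stream.
// Content-Length is the size of this frame's JPEG data.
func mjpegPart(jpeg []byte) []byte {
	header := fmt.Sprintf("\r\n--%s\r\nContent-Type: image/jpeg\r\nContent-Length: %d\r\n\r\n",
		partBoundary, len(jpeg))
	return append([]byte(header), jpeg...)
}

// writeMJPEGPart writes one part in a single Write call and flushes it
// when w supports flushing (an http.ResponseWriter usually does).
func writeMJPEGPart(w io.Writer, jpeg []byte) error {
	if _, err := w.Write(mjpegPart(jpeg)); err != nil {
		return err
	}
	if f, ok := w.(http.Flusher); ok {
		f.Flush()
	}
	return nil
}
```

With this helper, the three writes and the flush in watchHandler would collapse into `writeMJPEGPart(w, data)`.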

Streaming via canvas

First, register two more handlers with the web server:

```go
m.HandleFunc("/canvas", canvasHandler)
m.HandleFunc("/stream", streamHandler)
```

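/canvas serves the page for watching the live video. /stream is the WebSocket endpoint that the /canvas page connects to in order to receive the video frames.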
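All viewers read from the same frameChan. With two /watch pages open, or /watch together with /stream, each frame therefore reaches only one of them. A broadcast hub gives every viewer its own channel. The following is a minimal standard-library sketch. `Hub`, `NewHub`, `Subscribe`, `Unsubscribe` and `Publish` are hypothetical names and are not part of gorilla/websocket.

```go
package main

import "sync"

// Hub fans out each frame to every subscribed viewer. Each viewer has its
// own small buffer, so a slow viewer misses frames without stalling the
// ESP32 upload or the other viewers.
type Hub struct {
	mu   sync.Mutex
	subs map[chan []byte]struct{}
}

func NewHub() *Hub {
	return &Hub{subs: make(map[chan []byte]struct{})}
}

// Subscribe registers a viewer and returns its frame channel.
func (h *Hub) Subscribe() chan []byte {
	ch := make(chan []byte, 4)
	h.mu.Lock()
	h.subs[ch] = struct{}{}
	h.mu.Unlock()
	return ch
}

// Unsubscribe removes a viewer; call it when the viewer disconnects.
func (h *Hub) Unsubscribe(ch chan []byte) {
	h.mu.Lock()
	delete(h.subs, ch)
	h.mu.Unlock()
}

// Publish delivers frame to every viewer without blocking and returns
// how many viewers received it.
func (h *Hub) Publish(frame []byte) int {
	h.mu.Lock()
	defer h.mu.Unlock()
	delivered := 0
	for ch := range h.subs {
		select {
		case ch <- frame:
			delivered++
		default: // this viewer's buffer is full: it skips this frame
		}
	}
	return delivered
}
```

To wire it in, you could use a package-level `var hub = NewHub()` (an assumption, not part of the original code). videoHandler would call `hub.Publish(p)` instead of sending on frameChan. watchHandler and streamHandler would call `ch := hub.Subscribe()` and `defer hub.Unsubscribe(ch)`, then read from `ch`. All viewers share the same `[]byte`, which is safe as long as nobody modifies a frame after it has been published.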

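```go
// streamHandler sends each frame to the /canvas page as a binary WebSocket message.
func streamHandler(w http.ResponseWriter, r *http.Request) {
	ws, err := upgrader.Upgrade(w, r, nil)
	if err != nil {
		log.Printf("err: %v", err)
		return
	}
	defer ws.Close()
	for {
		// Block until the next frame arrives (frameChan is shared with /watch)
		data := <-frameChan
		if err := ws.WriteMessage(websocket.BinaryMessage, data); err != nil {
			return // the page was closed
		}
	}
}

// canvasHandler renders canvas.html.
// Requires "html/template" in the import list of the server code.
func canvasHandler(w http.ResponseWriter, r *http.Request) {
	tmpl, err := template.ParseFiles("./canvas.html")
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	var data = map[string]interface{}{}
	if err := tmpl.Execute(w, data); err != nil {
		log.Printf("template error: %v", err)
	}
}
```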

The last piece is canvas.html. Be sure to replace the value of wsURL with your web server's address.
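One alternative to hard-coding wsURL is to have canvasHandler compute it from the incoming request and pass it in the template data that it already executes. This sketch rests on two assumptions. First, canvas.html would read the value as `{{.WSURL}}`. Second, `streamURL` and the `WSURL` key are hypothetical names.

```go
package main

import "net/url"

// streamURL builds the WebSocket address of the /stream endpoint from the
// host the browser used. It chooses wss:// when the page was served over TLS.
func streamURL(host string, overTLS bool) string {
	scheme := "ws"
	if overTLS {
		scheme = "wss"
	}
	u := url.URL{Scheme: scheme, Host: host, Path: "/stream"}
	return u.String()
}
```

In canvasHandler this would become `data := map[string]interface{}{"WSURL": streamURL(r.Host, r.TLS != nil)}`. Behind a reverse proxy that terminates TLS, `r.TLS` is nil, so the scheme would need to come from the proxy configuration instead.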
